
Texture mapping has been a fundamental problem in computer graphics from its early days. As online image databases have become increasingly accessible, the ability to texture 3D models using casual images has gained importance. This ability will facilitate, for example, texturing a model of an animal using any of the hundreds of images of that animal found on the Internet, or enable a naive user to create personal avatars from the user’s own images.

We present a novel approach for performing texture mapping using casual images, which accounts for the 3D geometry of the photographed object. Our method overcomes the limitations of both the constrained-parameterization approach, which does not account for the photography effects, and the photogrammetric approach, which cannot handle arbitrary images. The key idea of our algorithm is to formulate the mapping estimation as a Moving-Least-Squares problem for recovering local camera parameters at each vertex. The algorithm is realized in a FlexiStickers application, which enables fast interactive texture mapping using a small number of constraints.

The task of texturing a 3D model from casual images presents two major challenges. First, the algorithm should overcome the differences in pose, proportion and articulation between the photographed object and the model. Second, photography effects present in the image should be compensated for.

In practice, texturing the model is performed by computing a mapping from the surface to the image. Given user-defined constraints, there are two common approaches to establishing this mapping: the constrained-parameterization approach and the photogrammetric approach.

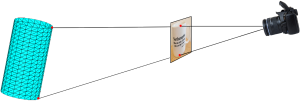

The constrained-parameterization approach computes the mapping by embedding the mesh onto the image plane, while attempting to satisfy the constraints and minimize a specific geometric distortion metric. Since no prior assumptions regarding the source image and the camera are made, this approach is suitable for casual images. However, inherent distortions might be introduced due to photography effects that result from the viewpoint and the object’s 3D geometry. This is demonstrated in the following image:

In this image, a "photographed" cylinder (a) is used as a source image, in which the text appears curved and the squares in the center seem wider than those near the silhouettes. These photography effects result from the viewpoint and the object’s 3D geometry. The model is a cylinder with different proportions (b). The result of constrained parameterization (c) strives to minimize the parameterization distortions, ignoring the photography effects. Therefore, the resulting texture mapping is incorrect (d).

In the photogrammetric approach, it is assumed that the photographed object is highly similar to the model to be textured and that it was acquired using a camera of a known model (e.g., a pinhole camera). The missing camera parameters are estimated and the recovered camera is used to reproject the model onto the source image, implicitly defining the model-image mapping.

The major advantage of the photogrammetric approach is that it compensates for the photography effects introduced by the camera projection. However, the inherent limitation of the approach is the requirement that the photographed object and the model are similar, prohibiting the use of casual images.

We present a new algorithm for texturing 3D models from casual images. The algorithm provides the advantages of the photogrammetric approach in compensating for the photography effects and the flexibility of the constrained-parameterization approach in using casual images.

The key idea of our algorithm is to perform the photogrammetric approach for texture mapping in a local manner.

In the photogrammetric approach, the model-image mapping is implicitly defined by a camera projection.

The user constraints are used to estimate the unknown camera parameters (e.g., position, orientation, focal length). This is performed by finding the parameters that minimize the projection error of the constraints.

This approach inherently compensates for the photography distortions. However, it cannot be used with casual images, as the assumption of model-object similarity does not hold for such images.
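The global estimation described above can be written compactly. The notation here is an assumption introduced for illustration and is not necessarily that of the paper: $\Pi(\cdot\,;\theta)$ denotes a camera projection with parameters $\theta$, and $(\mathbf{x}_i, \mathbf{u}_i)$, $i = 1, \dots, n$, are the user constraints, each pairing a point $\mathbf{x}_i$ on the model with a point $\mathbf{u}_i$ in the image.

$$
\theta^{*} = \arg\min_{\theta} \sum_{i=1}^{n} \left\| \Pi(\mathbf{x}_i;\theta) - \mathbf{u}_i \right\|^{2},
\qquad
\mathbf{u}(\mathbf{x}) = \Pi(\mathbf{x};\theta^{*})
$$

A single parameter vector $\theta^{*}$ is shared by every point $\mathbf{x}$ of the model, which is why the constraints can only be satisfied well when the model and the photographed object are similar.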

Instead of estimating a single global camera for the entire model, our algorithm estimates a local camera for each vertex. The model-image mapping is then defined by projecting each vertex using its specific camera.

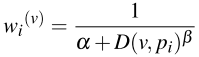

The camera parameters are estimated at each vertex independently by minimizing the weighted projection error of the constraints. The constraints are weighted non-uniformly at each vertex, with higher weight given to closer constraints.
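One way to write this per-vertex estimation is sketched below. The symbols are assumptions introduced for illustration: $\Pi(\cdot\,;\theta)$ is a camera projection with parameters $\theta$, $(\mathbf{x}_i, \mathbf{u}_i)$ are the user constraints pairing model points with image points, $d(\mathbf{v}, \mathbf{x}_i)$ is some distance between vertex $\mathbf{v}$ and constraint $i$, and $\alpha > 0$ controls the falloff. The inverse-distance weight is a common Moving-Least-Squares choice, not necessarily the one used in the paper.

$$
\theta_{\mathbf{v}} = \arg\min_{\theta} \sum_{i=1}^{n} w_i(\mathbf{v}) \left\| \Pi(\mathbf{x}_i;\theta) - \mathbf{u}_i \right\|^{2},
\qquad
w_i(\mathbf{v}) = \frac{1}{d(\mathbf{v}, \mathbf{x}_i)^{2\alpha}},
\qquad
\mathbf{u}(\mathbf{v}) = \Pi(\mathbf{v};\theta_{\mathbf{v}})
$$

Because the weights change smoothly with $\mathbf{v}$, neighbouring vertices receive similar cameras, while distant parts of the model are free to follow different ones.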

This approach is shown to handle casual images, as the constrained-parameterization approach does, while compensating for the photography effects, as the photogrammetric approach does. More details can be found in the paper.
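The per-vertex camera idea can be illustrated with a minimal NumPy sketch. It rests on several assumptions that are not taken from the paper: the local camera is a 2x4 affine camera (so that each fit is a linear weighted least-squares problem), distances are Euclidean, and the weights are inverse-distance with a hypothetical falloff parameter `alpha`. The function names are likewise illustrative.

```python
import numpy as np

def fit_local_affine_camera(vertex, constraints_3d, constraints_2d,
                            alpha=2.0, eps=1e-8):
    """Fit a 2x4 affine camera for one vertex by weighted least squares.

    Assumption: an affine camera and inverse-distance weights; the actual
    camera model and weight function of the method may differ.
    """
    # Closer constraints get higher weight; eps avoids division by zero.
    d = np.linalg.norm(constraints_3d - vertex, axis=1)
    w = 1.0 / (d ** (2.0 * alpha) + eps)
    # Homogeneous 3D constraint positions, shape (n, 4).
    X = np.hstack([constraints_3d, np.ones((len(constraints_3d), 1))])
    # Scaling rows by sqrt(w) turns ordinary least squares into the
    # weighted problem sum_i w_i * ||A x_i - u_i||^2.
    sw = np.sqrt(w)[:, None]
    A, *_ = np.linalg.lstsq(sw * X, sw * constraints_2d, rcond=None)
    return A.T  # shape (2, 4)

def map_vertices(vertices, constraints_3d, constraints_2d, alpha=2.0):
    """Project every vertex with its own locally fitted camera."""
    uv = np.empty((len(vertices), 2))
    for k, v in enumerate(vertices):
        cam = fit_local_affine_camera(v, constraints_3d, constraints_2d, alpha)
        uv[k] = cam @ np.append(v, 1.0)
    return uv
```

When the constraints are consistent with a single affine camera, every local fit recovers that camera and the result matches the global approach. When they are not, each vertex follows the constraints nearest to it. At least four constraints in general position are needed for the affine fit to be unique.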